This is a blog post covering how to connect a firecracker VM to network block storage. Read Part 2 here.

In this post, I’ll walk you through how I connected a firecracker VM to a network-attached block storage system called crucible.

Crucible is written by the good folks at Oxide Computer to power network-attached block volumes in their custom, cloud-based server rack. It has a lot of desirable properties that make it a ripe target to integrate with the firecracker VMM:

- It’s written in Rust, the same as firecracker itself, easing integration.

- Oxide’s own VMM, propolis, hooks into the crucible storage system through a VirtIO device layer similar to firecracker’s (along with an NVMe interface).

- It’s simple. Other storage systems like Ceph are great, but have a lot more moving pieces that are harder to work with.

Oxide’s rack platform is based on the illumos unix operating system, with bhyve as the underlying hypervisor. If we want to target firecracker on Linux, we’ll need to add some additional plumbing.

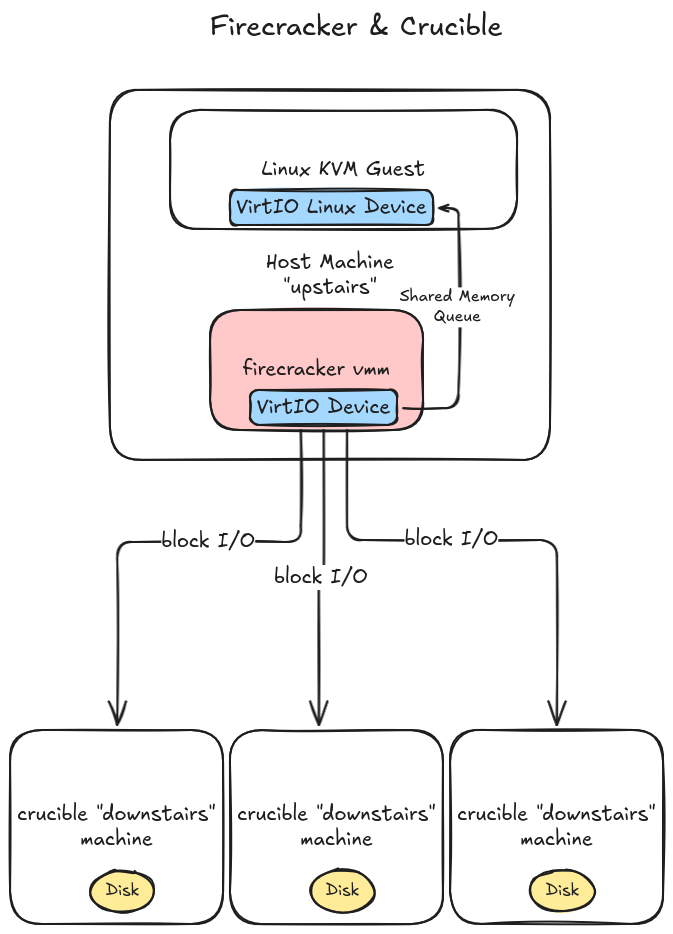

Here’s a rough sketch of what we’re going for:


Let’s take a look at the main crucible BlockIO interface, and unpack its basic operations so we can understand our integration surface area.

Crucible BlockIO Interface

The BlockIO interface is the main entrypoint for all block operations to crucible. It exposes simple read, write, and flush operations using an async Rust trait:

/// The BlockIO trait behaves like a physical NVMe disk (or a virtio virtual

/// disk): there is no contract about what order operations that are submitted

/// between flushes are performed in.

#[async_trait]

pub trait BlockIO: Sync {

/*

* `read`, `write`, and `write_unwritten` accept a block offset, and data

* buffer size must be a multiple of block size.

*/

async fn read(

&self,

offset: BlockIndex,

data: &mut Buffer,

) -> Result<(), CrucibleError>;

async fn write(

&self,

offset: BlockIndex,

data: BytesMut,

) -> Result<(), CrucibleError>;

async fn write_unwritten(

&self,

offset: BlockIndex,

data: BytesMut,

) -> Result<(), CrucibleError>;

async fn flush(

&self,

snapshot_details: Option<SnapshotDetails>,

) -> Result<(), CrucibleError>;

}
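To make the contract concrete, here is a minimal, synchronous sketch of a block device with the same shape: offsets are block indices, and buffers must be a whole number of blocks. `MemDisk` and `DiskError` are hypothetical names made up for illustration, not crucible types, and the real trait is async.

```rust
/// Hypothetical, synchronous stand-in for a block device; not crucible's API.
#[derive(Debug)]
pub struct MemDisk {
    block_size: usize,
    data: Vec<u8>,
}

#[derive(Debug, PartialEq, Eq)]
pub enum DiskError {
    /// Buffer length is not a multiple of the block size.
    Unaligned,
    /// The request runs past the end of the disk.
    OutOfRange,
}

impl MemDisk {
    pub fn new(block_size: usize, block_count: usize) -> Self {
        assert!(block_size > 0, "block size must be non-zero");
        Self { block_size, data: vec![0; block_size * block_count] }
    }

    /// Maps a block index plus buffer length to a byte range, validating both.
    fn byte_range(&self, block: usize, len: usize) -> Result<std::ops::Range<usize>, DiskError> {
        if len % self.block_size != 0 {
            return Err(DiskError::Unaligned);
        }
        let start = block.checked_mul(self.block_size).ok_or(DiskError::OutOfRange)?;
        let end = start.checked_add(len).ok_or(DiskError::OutOfRange)?;
        if end > self.data.len() {
            return Err(DiskError::OutOfRange);
        }
        Ok(start..end)
    }

    /// Fills `buf` with the blocks starting at block index `block`.
    pub fn read(&self, block: usize, buf: &mut [u8]) -> Result<(), DiskError> {
        let range = self.byte_range(block, buf.len())?;
        buf.copy_from_slice(&self.data[range]);
        Ok(())
    }

    /// Stores `buf` at block index `block`.
    pub fn write(&mut self, block: usize, buf: &[u8]) -> Result<(), DiskError> {
        let range = self.byte_range(block, buf.len())?;
        self.data[range].copy_from_slice(buf);
        Ok(())
    }
}
```

The sketch has no flush: with everything held in one `Vec`, there is nothing to make durable.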

In the firecracker code, we’re going to wire up our VirtIO storage operations to crucible’s Volume type.

This implements the BlockIO trait interface, and also abstracts away the concept of “subvolumes” (useful for building layered block devices).

Let’s add the bare minimum to firecracker to get a virtio disk to perform no-op block operations, and emit log lines to the firecracker machine log.

From firecracker/src/vmm/src/devices/virtio/block/virtio/io/crucible.rs:

use crate::logger::debug;

use crate::vstate::memory::{GuestAddress, GuestMemoryMmap};

#[derive(Debug, displaydoc::Display)]

pub enum CrucibleError {

}

#[derive(Debug)]

pub struct CrucibleEngine {

}

impl CrucibleEngine {

pub fn read(

&mut self,

offset: u64,

mem: &GuestMemoryMmap,

addr: GuestAddress,

count: u32,

) -> Result<u32, CrucibleError> {

debug!("Crucible read. offset: {}, addr: {:?}, count: {}", offset, addr, count);

Ok(0)

}

pub fn write(

&mut self,

offset: u64,

mem: &GuestMemoryMmap,

addr: GuestAddress,

count: u32,

) -> Result<u32, CrucibleError> {

debug!("Crucible write. offset: {}, addr: {:?}, count: {}", offset, addr, count);

Ok(0)

}

pub fn flush(&mut self) -> Result<(), CrucibleError> {

debug!("Crucible flush.");

Ok(())

}

}

We’ll be temporarily hooking up our CrucibleEngine type to the firecracker FileEngine type.

This is a hack to work around the fact that firecracker only supports host files for its block storage backend (no network volume support in sight!). Since we eventually want to hook firecracker up to crucible over the network, we’ll need to refactor this out to a cleaner interface down the road.

For now though, we’ll just add Crucible variants to FileEngine, FileEngineType, and the block I/O error type, and touch a few other metadata types throughout the existing firecracker VirtIO machinery.

diff --git a/src/vmm/src/devices/virtio/block/virtio/io/mod.rs b/src/vmm/src/devices/virtio/block/virtio/io/mod.rs

index 09cc7c4e31..c5d9880f25 100644

--- a/src/vmm/src/devices/virtio/block/virtio/io/mod.rs

+++ b/src/vmm/src/devices/virtio/block/virtio/io/mod.rs

@@ -2,6 +2,7 @@

// SPDX-License-Identifier: Apache-2.0

pub mod async_io;

+pub mod crucible;

pub mod sync_io;

use std::fmt::Debug;

@@ -9,6 +10,8 @@

pub use self::async_io::{AsyncFileEngine, AsyncIoError};

pub use self::sync_io::{SyncFileEngine, SyncIoError};

+pub use self::crucible::{CrucibleEngine, CrucibleError};

+

use crate::devices::virtio::block::virtio::PendingRequest;

use crate::devices::virtio::block::virtio::device::FileEngineType;

use crate::vstate::memory::{GuestAddress, GuestMemoryMmap};

@@ -31,6 +34,8 @@

Sync(SyncIoError),

/// Async error: {0}

Async(AsyncIoError),

+ /// Crucible error: {0}

+ Crucible(CrucibleError),

}

impl BlockIoError {

@@ -54,6 +59,7 @@

#[allow(unused)]

Async(AsyncFileEngine),

Sync(SyncFileEngine),

+ Crucible(CrucibleEngine),

}

diff --git a/src/vmm/src/devices/virtio/block/virtio/device.rs b/src/vmm/src/devices/virtio/block/virtio/device.rs

index ecdd8ee4f6..61ce02911d 100644

--- a/src/vmm/src/devices/virtio/block/virtio/device.rs

+++ b/src/vmm/src/devices/virtio/block/virtio/device.rs

@@ -50,6 +50,9 @@

/// Use a Sync engine, based on blocking system calls.

#[default]

Sync,

+

+ // Use a Crucible, remote network block storage backend.

+ Crucible,

}

A more complete version of this change, stubbing out the basic virtio interfaces, is in this commit.
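Adding a variant is enough to route requests because the engine is an enum, and each operation matches on it. Here is a simplified sketch of that dispatch pattern. `Engine`, `SyncEngine`, and `CrucibleStub` are hypothetical stand-ins, far smaller than the real firecracker types.

```rust
/// Hypothetical stand-in for a file-backed engine.
#[derive(Debug, Default)]
pub struct SyncEngine {
    pub bytes_read: u64,
}

/// Hypothetical stand-in for the stubbed-out crucible engine.
#[derive(Debug, Default)]
pub struct CrucibleStub {
    pub calls: u32,
}

/// One enum variant per backend, mirroring the shape of the diff above.
#[derive(Debug)]
pub enum Engine {
    Sync(SyncEngine),
    Crucible(CrucibleStub),
}

impl Engine {
    /// Dispatches a read to the active backend and returns the bytes transferred.
    pub fn read(&mut self, _offset: u64, count: u32) -> u32 {
        match self {
            Engine::Sync(engine) => {
                // Pretend the whole request was served from a host file.
                engine.bytes_read += u64::from(count);
                count
            }
            Engine::Crucible(engine) => {
                // The stub only records the call and transfers nothing.
                engine.calls += 1;
                0
            }
        }
    }
}
```

Because the match is exhaustive, the compiler flags every place that needs a new arm once a variant is added, which makes this kind of stubbing fairly mechanical.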

Now let’s try starting a firecracker VM, and have it use our new Crucible FileEngineType. I recommend following the firecracker getting-started docs to download a minimal Linux kernel and a simple Ubuntu 24.04 rootfs that we can use for booting.

From firecracker/scripts/test_machine.json:

{

"boot-source": {

"kernel_image_path": ".kernel/vmlinux-6.1.141.1",

"boot_args": "console=ttyS0 reboot=k panic=1 pci=off"

},

"logger": {

"log_path": "test_machine.log",

"level": "debug"

},

"drives": [

{

"drive_id": "rootfs",

"path_on_host": ".kernel/ubuntu-24.04.ext4",

"is_root_device": true,

"is_read_only": false

},

{

"drive_id": "storage",

"path_on_host": "storage.ext4",

"is_root_device": false,

"is_read_only": false,

"io_engine": "Crucible"

}

],

"machine-config": {

"vcpu_count": 1,

"mem_size_mib": 1024

}

}

Note a few things going on here:

- Most of this is standard firecracker configuration. We use both the linux kernel image, and ubuntu rootfs from the firecracker getting started docs above.

- In addition to our rootfs, we attach an additional disk, and then specify an io_engine of Crucible.

- We set up debug logging to a file that resides on the host, so we can see debug output from our storage calls and other debug info within the vmm.

Eagle-eyed readers will notice that we’re kludging onto the existing path_on_host FileEngine configuration parameter. This is temporarily required so the firecracker I/O pipeline can respond to other virtio protocol operations, such as detecting the total disk size. We’ll fix this down the road, but for now, let’s write out a 100 megabyte ext4 volume on our host machine.

$ dd if=/dev/zero of=storage.ext4 bs=1M count=100

$ mkfs.ext4 storage.ext4

Let’s run this thing!

From the firecracker root:

$ cargo run --bin firecracker -- --api-sock /tmp/fc0.sock --config-file ./scripts/test_machine.json

After the VM boots, you’ll see our stubbed out crucible log lines in test_machine.log:

$ cat test_machine.log

2025-10-11T17:23:52.669778605 [anonymous-instance:main] Successfully started microvm that was configured from one single json

2025-10-11T17:23:52.678229324 [anonymous-instance:fc_vcpu 0] vcpu: IO write @ 0xcf8:0x4 failed: bus_error: MissingAddressRange

2025-10-11T17:23:52.678273670 [anonymous-instance:fc_vcpu 0] vcpu: IO read @ 0xcfc:0x2 failed: bus_error: MissingAddressRange

2025-10-11T17:23:52.843229440 [anonymous-instance:fc_vcpu 0] vcpu: IO read @ 0x87:0x1 failed: bus_error: MissingAddressRange

2025-10-11T17:23:52.871489216 [anonymous-instance:main] Crucible read. offset: 0, addr: GuestAddress(60973056), count: 4096

2025-10-11T17:23:52.871522428 [anonymous-instance:main] Failed to execute In virtio block request: PartialTransfer { completed: 0, expected: 4096 }

2025-10-11T17:23:53.817540784 [anonymous-instance:main] Crucible read. offset: 0, addr: GuestAddress(91717632), count: 4096

2025-10-11T17:23:53.817570964 [anonymous-instance:main] Failed to execute In virtio block request: PartialTransfer { completed: 0, expected: 4096 }

2025-10-11T17:23:53.820961329 [anonymous-instance:main] Crucible read. offset: 0, addr: GuestAddress(91717632), count: 4096

2025-10-11T17:23:53.820968243 [anonymous-instance:main] Failed to execute In virtio block request: PartialTransfer { completed: 0, expected: 4096 }

Success! Our log lines show up in the machine log, alongside errors from the failed I/O calls (expected, since the stubs report zero bytes transferred). We’ve got our first toe-hold onto the main firecracker block I/O path.
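The `PartialTransfer { completed: 0, expected: 4096 }` errors are consistent with our stubs returning `Ok(0)`: the guest asked for 4096 bytes and the engine reported none. A minimal sketch of that kind of check follows. `check_transfer` and `TransferError` are hypothetical names, and this is not firecracker’s actual code.

```rust
/// Hypothetical error type, shaped after the log line in the machine log.
#[derive(Debug, PartialEq, Eq)]
pub enum TransferError {
    PartialTransfer { completed: u32, expected: u32 },
}

/// Compares the bytes an engine reports against what the request asked for.
pub fn check_transfer(completed: u32, expected: u32) -> Result<u32, TransferError> {
    if completed == expected {
        Ok(completed)
    } else {
        Err(TransferError::PartialTransfer { completed, expected })
    }
}
```

Under this assumption, the errors should go away once the engine returns `count` for a fully served request.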

VirtIO Internals

Before we implement the interface with real block reads, writes and flushes, let’s clarify a few inner details about how VirtIO block devices fit into our overall scheme to share data back and forth between the guest VM and our storage backend.

A key thing to know about VirtIO host device implementations is that they’re cooperative with the underlying VMM and hypervisor. In the case of firecracker, the setup looks something like this:

- Firecracker controls and drives KVM. KVM acts as the actual VM hypervisor, running inside the Linux kernel, with Firecracker managing it.

- VirtIO devices are exposed to the guest VM over a transport, commonly an emulated PCI bus (firecracker has traditionally used the simpler MMIO transport, which is why our boot args above include pci=off). Linux and most other operating systems ship standard VirtIO device drivers that the guest loads to communicate over this transport.

- During device negotiation, the guest driver and the host agree on where the VirtIO queues live in guest memory, which the VMM can also access. This shared queue data structure is how host and guest pass block operations back and forth to each other. Firecracker is responsible for mapping guest memory and locating the queues within it.

- When the guest reads or writes, its driver places requests and data buffers on the shared memory queues.

- The firecracker VMM, running in host user space, reads the shared memory queues on behalf of the virtual devices it holds in its own process memory, and the requests are ultimately processed through the FileEngine machinery we touched on before.

The beauty of VirtIO is its simplicity: Guest drivers can be generic, and simply pass buffers back and forth to the VMM through hypervisor translated physical / virtual address space. Our Rust code in user space can then directly translate these VirtIO block data reads and writes into crucible block reads / writes over the network. This saves us the headache of writing custom Linux block devices that would need to interface directly with crucible. VirtIO acts as our “bridge layer” between hypervisor, VMM, and our guest. Huzzah!
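As a rough illustration of what arrives on those queues, each block request starts with a 16-byte header: a 32-bit type, 32 reserved bits, and a 64-bit sector number. The sketch below decodes one, using the request type values and 512-byte sector unit from the VirtIO block device specification. `Request` and `parse_header` are hypothetical names, it assumes little-endian fields as on modern VirtIO devices, and it is not firecracker’s parsing code.

```rust
/// Request types from the VirtIO block device specification.
pub const VIRTIO_BLK_T_IN: u32 = 0;
pub const VIRTIO_BLK_T_OUT: u32 = 1;
pub const VIRTIO_BLK_T_FLUSH: u32 = 4;

/// VirtIO block requests address the disk in 512-byte sectors.
pub const SECTOR_SIZE: u64 = 512;

/// Hypothetical decoded form of a request, with the sector turned into a byte offset.
#[derive(Debug, PartialEq, Eq)]
pub enum Request {
    Read { offset: u64 },
    Write { offset: u64 },
    Flush,
}

/// Decodes the request header: type (u32), reserved (u32), sector (u64).
pub fn parse_header(hdr: &[u8; 16]) -> Option<Request> {
    let ty = u32::from_le_bytes([hdr[0], hdr[1], hdr[2], hdr[3]]);
    let mut sector_bytes = [0u8; 8];
    sector_bytes.copy_from_slice(&hdr[8..16]);
    let sector = u64::from_le_bytes(sector_bytes);
    // Reject sector numbers whose byte offset would overflow.
    let offset = sector.checked_mul(SECTOR_SIZE)?;
    match ty {
        VIRTIO_BLK_T_IN => Some(Request::Read { offset }),
        VIRTIO_BLK_T_OUT => Some(Request::Write { offset }),
        VIRTIO_BLK_T_FLUSH => Some(Request::Flush),
        _ => None,
    }
}
```

An "In" request is a read from the guest’s point of view, which matches the "Failed to execute In virtio block request" lines in the machine log.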

Block Reads from Memory

With a better picture of VirtIO guest / host interactions, let’s plug in an “in-memory” implementation of the BlockIO interface. This won’t yet get us to network attached storage, but it will help us correctly bridge between firecracker and the BlockIO interface.

Below are the core read / write / flush methods filled out in CrucibleEngine.

From src/vmm/src/devices/virtio/block/virtio/io/crucible.rs:

#[derive(Debug)]

pub struct CrucibleEngine {

volume: Volume,

block_size: u64,

rt: Arc<Runtime>,

buf: crucible::Buffer,

}

impl CrucibleEngine {

// Translates firecracker I/O reads into crucible `BlockIO` reads.

pub fn read(

&mut self,

offset: u64,

mem: &GuestMemoryMmap,

addr: GuestAddress,

count: u32,

) -> Result<u32, anyhow::Error> {

debug!("Crucible read. offset: {}, addr: {:?}, count: {}", offset, addr, count);

// Ensure we can fetch the region of memory before we attempt any crucible reads.

let mut slice = mem.get_slice(addr, count as usize)?;

let (off_blocks, len_blocks) =

Self::block_offset_count(offset as usize, count as usize, self.block_size as usize)?;

self.buf.reset(len_blocks, self.block_size as usize);

// Because firecracker doesn't have an async runtime, we must explicitly

// block waiting for a crucible read call.

let _ = self.rt.block_on(async {

self.volume.read(off_blocks, &mut self.buf).await

})?;

let mut buf: &[u8] = &self.buf;

// Now, map the read crucible blocks into VM memory

buf.read_exact_volatile(&mut slice)?;

Ok(count)

}

// Translates firecracker I/O writes into crucible `BlockIO` writes.

pub fn write(

&mut self,

offset: u64,

mem: &GuestMemoryMmap,

addr: GuestAddress,

count: u32,

) -> Result<u32, anyhow::Error> {

debug!("Crucible write. offset: {}, addr: {:?}, count: {}", offset, addr, count);

let slice = mem.get_slice(addr, count as usize)?;

let (off_blocks, len_blocks) =

Self::block_offset_count(offset as usize, count as usize, self.block_size as usize)?;

let mut data = Vec::with_capacity(count as usize);

data.write_all_volatile(&slice)?;

let mut buf: crucible::BytesMut = crucible::Bytes::from(data).into();

let _ = self.rt.block_on(async {

self.volume.write(off_blocks, buf).await

})?;

Ok(count)

}

// Translates firecracker I/O flushes into crucible `BlockIO` flushes.

pub fn flush(&mut self) -> Result<(), anyhow::Error> {

debug!("Crucible flush.");

Ok(self.rt.block_on(async {

self.volume.flush(None).await

})?)

}

}

At a high level, we’re simply mapping a region of guest memory and filling it with data from the underlying Volume (volume is what implements BlockIO), or copying it out to the Volume for writes.
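The read and write paths above call a `block_offset_count` helper whose body isn’t shown in this post. A plausible sketch, assuming it simply converts a byte offset and byte count into block units and rejects unaligned requests, might look like the following. `AlignError` is a hypothetical type, and this version returns plain integers, whereas the real helper has to produce whatever offset type `volume.read` expects.

```rust
/// Hypothetical error type for requests that don't line up with block boundaries.
#[derive(Debug, PartialEq, Eq)]
pub enum AlignError {
    ZeroBlockSize,
    UnalignedOffset,
    UnalignedCount,
}

/// Converts a byte offset and byte count into (block offset, block count).
pub fn block_offset_count(
    offset: usize,
    count: usize,
    block_size: usize,
) -> Result<(usize, usize), AlignError> {
    if block_size == 0 {
        return Err(AlignError::ZeroBlockSize);
    }
    if offset % block_size != 0 {
        return Err(AlignError::UnalignedOffset);
    }
    if count % block_size != 0 {
        return Err(AlignError::UnalignedCount);
    }
    Ok((offset / block_size, count / block_size))
}
```

With a 512-byte block size, a 4096-byte read at byte offset 61440 becomes 8 blocks starting at block 120. With a 4096-byte block size, the same request is 1 block starting at block 15.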

One very not fun thing: firecracker doesn’t use async Rust at all, so we’re going to have to inject a tokio runtime if we want to use the existing crucible interfaces. When we’re making I/O calls into crucible (and potentially performing operations over the network), we have to use block_on calls from the tokio async Runtime.
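To show what blocking on a future from synchronous code amounts to, here is a toy `block_on` built only on the standard library. It is a sketch of the idea, not a replacement for tokio: tokio’s runtime also drives network I/O and timers, which crucible needs. `fake_volume_read` and `read_sync` are hypothetical names.

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

/// Wakes the blocked thread when the future can make progress.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

/// Polls `fut` on the current thread, parking between polls until it completes.
pub fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(output) => return output,
            Poll::Pending => thread::park(),
        }
    }
}

/// Hypothetical async storage call standing in for `volume.read(...)`.
async fn fake_volume_read(blocks: u32, block_size: u32) -> Result<u32, String> {
    blocks
        .checked_mul(block_size)
        .ok_or_else(|| "length overflow".to_string())
}

/// Synchronous wrapper, shaped like the engine methods in this post.
pub fn read_sync(blocks: u32, block_size: u32) -> Result<u32, String> {
    block_on(fake_volume_read(blocks, block_size))
}
```

The cost of this pattern is that the calling thread is stalled for the full duration of each storage call, which matters more once those calls cross the network.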

For read operations, we reuse a shared crucible::Buffer: crucible fills it with the requested blocks, and we then copy its contents into guest memory. For writes, we copy the guest memory into a fresh buffer and hand it off to crucible.

For now, we’re just going to attach an in-memory subvolume as our underlying block storage (obviously not durable!).

impl CrucibleEngine {

pub async fn in_memory_volume(block_size: u64, disk_size: usize) -> Result<Volume, anyhow::Error> {

let volume_logger = crucible_common::build_logger_with_level(slog::Level::Debug);

let mut builder = VolumeBuilder::new(block_size, volume_logger);

let disk = Arc::new(InMemoryBlockIO::new(

Uuid::new_v4(),

block_size,

disk_size,

));

builder.add_subvolume(disk).await?;

Ok(Volume::from(builder))

}

}

When we fire up a new VM now, the calls actually work, and we’re “persisting” real block data!

root@ubuntu-fc-uvm:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

vda 254:0 0 1G 0 disk /

vdb 254:16 0 100M 0 disk

root@ubuntu-fc-uvm:~# mkfs.ext4 /dev/vdb

mke2fs 1.47.0 (5-Feb-2023)

Creating filesystem with 25600 4k blocks and 25600 inodes

Allocating group tables: done

Writing inode tables: done

Creating journal (1024 blocks): done

Writing superblocks and filesystem accounting information: done

root@ubuntu-fc-uvm:~# mkdir -p /mnt/storage

root@ubuntu-fc-uvm:~# mount -t ext4 /dev/vdb /mnt/storage/

root@ubuntu-fc-uvm:~# ls -lah /mnt/storage/

total 24K

drwxr-xr-x 3 root root 4.0K Oct 13 01:43 .

drwxr-xr-x 3 root root 4.0K Oct 11 22:15 ..

drwx------ 2 root root 16K Oct 13 01:43 lost+found

root@ubuntu-fc-uvm:~# echo "Hello crucible!" > /mnt/storage/hello

root@ubuntu-fc-uvm:~# cat /mnt/storage/hello

Hello crucible!

root@ubuntu-fc-uvm:~#

If we peek back at our test_machine.log, we can see the debug output of the block operations:

2025-10-12T20:44:07.112380529 [anonymous-instance:main] Crucible read. offset: 61440, addr: GuestAddress(87900160), count: 4096

2025-10-12T20:44:13.100879724 [anonymous-instance:main] Crucible write. offset: 98304, addr: GuestAddress(84340736), count: 4096

2025-10-12T20:44:13.101295591 [anonymous-instance:main] Crucible write. offset: 102400, addr: GuestAddress(105701376), count: 4096

2025-10-12T20:44:13.102050504 [anonymous-instance:main] Crucible write. offset: 106496, addr: GuestAddress(86339584), count: 4096

2025-10-12T20:44:21.115309239 [anonymous-instance:main] Crucible read. offset: 122880, addr: GuestAddress(85647360), count: 4096

What’s Next

We’ve now got firecracker and crucible interfaced together. We took incremental steps as we discovered the ins-and-outs of both the firecracker codebase, as well as the various crucible interfaces.

In an upcoming post (UPDATE: Next post is here), we’ll wire these calls into the crucible “downstairs” network server. In crucible lingo, the “downstairs” is the component responsible for managing the block data through underlying files in ZFS on disk. This will give us true data persistence, as well as block device portability across VM restarts.

Our goal will eventually be to orchestrate multiple firecracker VMs using saga-based lifecycle management similar to that of the Oxide control plane, omicron. Then there’s nothing stopping us from shoving this all in a basement homelab rack, and seeing if we can get an experimental multi-machine VM setup going.

All the code is up on GitHub.